Simulating Conway's Game of Life with 100.000 Erlang Processes

I decided to play around with OTP recently. It is the core of the Elixir language, but I rarely use everything it has to offer. So, I decided on coding Conway’s Game of Life and made every cell its own GenServer. That means that every cell has its own Erlang process. But why? you might ask. Well, because … why not?! 😄

It took my three attempts to build a correctly running Game of Life since debugging distributed systems is HARD. In the first two attempts, I wrote zero tests and debugged the game mechanics by printing the game state after every round. That was a bad idea.

Cells weren’t spawning when they were supposed to. Living cells weren’t dying, although they should. I started logging every cell state, but that only increased the lines printed and didn’t lead to significant breakthroughs. I tinkered around for a few days but gave up eventually since it was hard to identify bugs and even harder to fix them.

On my final attempt, I decided to go down the TDD road, but not too strictly. Sometimes I wrote the test first and then the code. Sometimes I did the opposite. What mattered was that eventually, I had every case of the game tested and working correctly. I broke down the game mechanics into smaller steps and only tested those, like: A cell dies if it does not have three alive neighbors. or A cell notifies all its neighbors that it is alive. The tests helped me get the game mechanics right on the lowest levels. Then, I put the pieces together, and the game JustWorked™! I previously spent days fixing the issues, but this time, it only took me 2 hours to get the game working correctly. Go tests!

However, this post isn’t about TDD. It is about the decisions I made and performance tweaks I used to build Conway’s Game of Life and scale it to 100.000 erlang processes. I won’t show the actual code since the code wasn’t the problem. It was the how to write it that mattered. Therefore, I will rather describe key findings and highlight certain decisions instead of showing you raw code. You can always check out the code on GitHub.

Enough talk. Gimme the juice.

Let’s first talk about the architecture of the project. I decided on using a single LiveView (GitHub) to manage a single game. It allowed me to start and stop a game without interfering with other games played in parallel. The LiveView is responsible to start and stop all Cell-GenServers and update the UI.

The idea was to let the Cell-GenServers manage their own state and update their UI representation (their “UI-Cell”) through the LiveView. They also needed to communicate with their neighbors to count the number of alive cells in their vicinity. I used direct messaging over PubSub for this and will explain why later on.

I used a tick/tock-approach to update the game. A game round would start with the LiveView sending a :tick-message to all cells. The cells would then notify their neighbors if they were alive. Each cell would use these messages to count its alive neighbors. After a while, the LiveView would send a :tock-message and each cell would look at how many alive neighbors it has and update its alive?-state based on the Game of Life rules. In short, an alive cell with two or three live neighbors stays alive, otherwise it dies. A dead cell with three live neighbors becomes alive.

If a cell changed its alive?-state, it would send a message to the LiveView with its coordinates on the game board and the new alive?-state. The LiveView collected all these updates in a staging_grid until the next :tick message. By keeping the updates in a temporary variable, I avoided sending the entire game state to the UI whenever a cell notified the LiveView. The game round ended with the next :tick-message at which point the LiveView would update the game board with the staging_grid version.

The problem with LiveComponents

I first used LiveComponents to represent the cells in the UI with one LiveComponent per cell. However, that turned out to slow down the updates of the game board tremendously. Have a look at how slow the board builds up when the update messages are sent separately for every cell:

It took around 30 seconds to update the board, which was not feasible given that the board would have to update roughly twice per second. I tested sending the entire game state in a single message for every update. I discuss how I optimized the payload and found a weird update lag with morphdom below. When I sent the entire game state, the board updated almost instantaneously:

I removed the LiveComponents and rather rendered the entire UI through the LiveView (GitHub). Here’s a representation of the previous and final architecture:

Communication between Cell Processes

I decided on using direct messaging for this communication rather than PubSub. Using PubSub, I had to create 100.000 PubSub topics, one for each cell, which didn’t seem efficient to me. Instead, I named every cell process deterministically with the formula: #{lv_pid}-#{row}-#{col} where col and row are the x and y coordinates of the cell on the game board. lv_pid is the PID of the LiveView which spawned them. The deterministic naming scheme enabled me to send messages to cells without looking up their PID every time.

iex> lv_pid = self()

#PID<0.444.0>

iex> {:ok, cell} = GenServer.start_link(Cell, lv_pid: lv_pid, row: 1, col: 2)

{:ok, #PID<0.123.0>}

iex> process_name = "#{inspect(lv_pid)}-1-2"

"#PID<0.444.0>-1-2"

iex> send(process_name, :hello_there)

:hello_back

iex> send(cell, :hello_there)

:hello_back

Using the deterministic naming scheme, cells could easily send messages to their neighbors by generating their process_name as shown above. That meant that cells didn’t need to keep track of the PIDs of their neighbors, which removed a lot of complexity. Since the PID of the LiveView would always be unique and that the cells and their names were removed automatically when a LiveView stops, there could be no collision between cell process names. You can read more about process name registration here. You can find the Cell-Module here

Communication between Cells and UI

Since I already gave every cell the PID of the LiveView for the process name generation, it was trivial to send UI updates to the LiveView. Whenever the cell alive?-state changed, it sent a :set_alive-message to the LiveView. The LiveView would collect the alive?-changes in a staging_grid until the end of the game round. At that moment, it overwrote the game board grid with the staging_grid.

The update message containing the game state was significant in size. For only 10.000 processes, every message was around 400kb. I significantly decreased the payload with only a few steps. First, this was how I represented the cells at first:

<table>

<tr>

<td id={"cell-#{row}-#{col}"}

class={"cell #{if alive?, do: 'alive'}"}>

</td>

</tr>

</table>

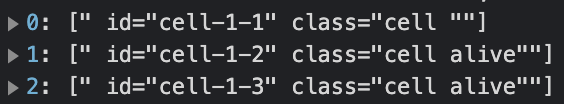

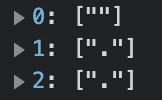

I gave every cell an id and added the alive class if the cell was alive. The payload looked like this for every update:

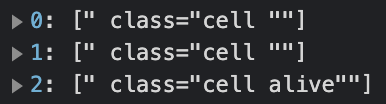

Since I didn’t need the id in the production code, I removed them, which decreased the payload size from 400kb to 230kb (43%). Now, the payload looked like this:

I also didn’t need the real class names. So, I replaced cell with c and alive with a. That decreased the payload by another 20% (down to 185kb).

However, the payload contained the class=c -part for all of the 100.000 cells. That seemed unnecessary to me, which is why I rewrote how I presented the alive?-state. Here is the before and after comparison:

# Before

.c {

width: 0.1rem;

height: 0.1rem;

}

.a {

background-color: #fff;

}

<td class={"c #{if alive?, do: 'a'}"}></td>

# After

td {

width: 0.1rem;

height: 0.1rem;

background-color: #fff;

}

td:empty {

background-color: #000;

}

<td><%= if alive?, do: "." %></td>

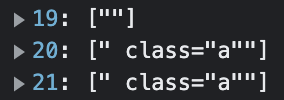

Thus, instead of toggling CSS classes, I rendered a dot in cells, which were alive, and used CSS logic to style non-empty cells. Now, the payload looked like this:

This decreased the payload by another 70% down to 56kb. However, it somehow added a significant lag to the rendering of the grid. See for yourself in this video of 40.000 cells being rendered. Do you see the lag between the message coming in and the rendering?

The td:empty CSS logic didn’t cause the lag. I removed the CSS condition td:empty and only rendered the dots. The lag was still there. My best guess is that the payload structure was different in such a way that caused morphdom to slow down tremendously, but further research is needed here.

Eventually, I settled on the following code (GitHub):

td {

width: 0.1rem;

height: 0.1rem;

}

.a {

background-color: #fff;

}

<td class={if alive?, do: "a"}></td>

With this version, the payload was still manageable at ~80kb per update, but the lag was gone. It only sent the class=a part for live cells and sent a [""] change otherwise.

The following video renders 40.000 cells without any lag.

Like what you read? Sign up for more!

Spawning 100.000 processes really, really fast

When I began a new game, the LiveView would first start 100.000 processes and link them to itself. Unfortunately, at first, this took up to 90 seconds! This seemed odd to me. I took to the ElixirForum to ask way smarter people than me. My first assumption was that spawning 100.000 erlang processes simply took a while. So, I asked whether spawning them asynchronously would speed up the process. It would not.

What was even odder was the fact that on other people’s machines, it only took 500ms to spawn that many processes! Eventually, Greg Vaughn had the genius solution: When I started a cell, and it randomly chose to be alive, it sent a message back to the LiveView to set its UI state to alive. That caused the LiveView to handle up to 50.000 messages since there was a 50% chance of a cell to start alive.

Usually, the LiveView would handle a lot of messages very quickly. However, in this situation, the messages from the cells to the LiveView somehow blocked or slowed down the spawning process tremendously. I first moved the sending of the message to the handle_continue/2-block of the cell. That didn’t change anything. Eventually, I removed sending back a message upon initialization altogether. With this fix, I could now spawn 100.000 processes in only 300ms! Thanks again to Greg Vaughn and all the others who contributed ❤️

Duplicating the World. Twice.

One last worry I had was that I copied the entire game state on every cell update. Whenever a cell sent a set_alive-message to the LiveView, I updated its state in the staging_grid. The staging_grid was just one giant map with 100.000 key-value pairs. Since maps are immutable in Elixir, this meant copying all the other 99.999 entries into a new map only to update a single value.

However, I wrote a little benchmark and was positively surprised to learn that updating a map with 100.000 key-value pairs takes only 500ns on average (that is Nanoseconds)! Even with 10.000.000 entries, the update took only around 1 microsecond, which is ridiculously fast (if one doesn’t do machine learning).

Before this test, the thought Elixir is amazing, but not super fast lived in the back of my mind. After this test, I safely deleted this thought. Sure, for a few use-cases, it can’t compete with e.g. C. But for real-life applications, it hardly matters. That somehow relieved me.

(Not) blowing up the hardware

While running the game with 100.000 processes, I used the wonderful LiveDashboard to monitor my machine and analyze the hardware consumption of the game. Unfortunately, I discovered that the memory usage slowly but steadily increased from initially 150mb to later on multiple gigabytes. I did not analyze this finding further, but I assume that the update frequency of the game was too high.

When a cell receives the :tock-message too soon after the initial :tick-message, it might not be able to handle all incoming messages from its neighbors in time before the next round starts. The queue of unhandled messages would build up over time, leading to more memory consumption per process. However, I did not confirm this assumption, and further investigation is needed here.

Putting it all together

With all the fixes and performance tweaks in place, it was time to put the game to the test! I recorded 3 minutes of the game and put it up on YouTube. The following video shows the game in real-time with 100.000 processes:

The end

Thank you for sticking until the end! I hope you could learn a bit and found this article somewhat helpful. If you have any questions, please don’t hesitate to message me on BlueSky or comment on my Tweet about this project. Until next time! Cheerio!